26 August 2024

Authors:

Evelina Leivada, Fritz Günther & Vittoria Dentella

Title:

Reply to Hu et al.: Applying different evaluation standards to humans vs. Large Language Models overestimates AI performance

Publisher: PNAS 121(36), e2406752121 (National Academy of Sciences)

Publication date: 26 August 2024

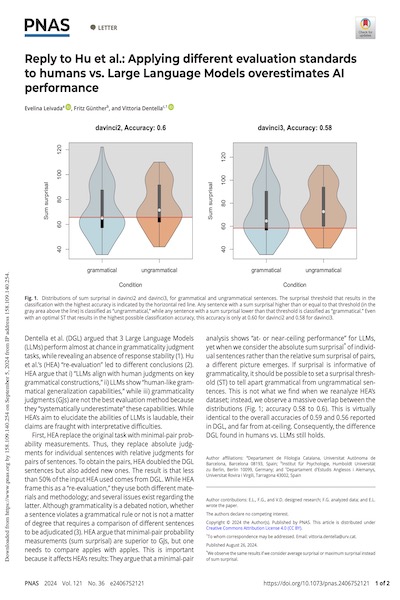

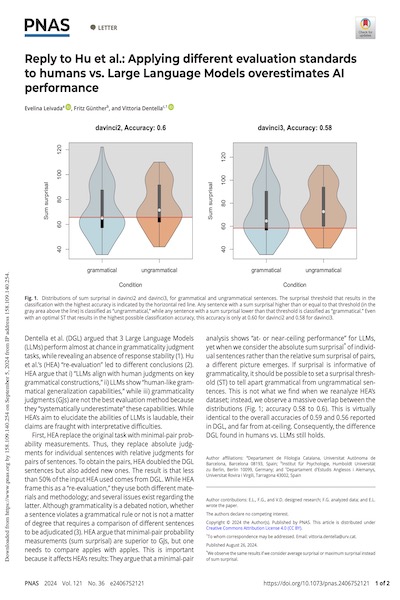

Dentella et al. (DGL) argued that 3 Large Language Models (LLMs) perform almost at chance in grammaticality judgment tasks, while revealing an absence of response stability (1). Hu et al.’s (HEA) “re-evaluation” led to different conclusions (2). HEA argue that i) “LLMs align with human judgments on key grammatical constructions,” ii) LLMs show “human-like grammatical generalization capabilities,” while iii) grammaticality judgments (GJs) are not the best evaluation method because they “systematically underestimate” these capabilities. While HEA’s aim to elucidate the abilities of LLMs is laudable, their claims are fraught with interpretative difficulties.

31 December 2022

Authors:

Io Salmons & Anna Gavarró

Title:

Intervention effects in Catalan agrammatism

Publisher: Glossa: a journal of general linguistics (Open Library of Humanities)

Publication date: 31 December 2022

The goal of the present study is to test the agrammatic comprehension of clitic left dislocation and contrastive focus in Catalan, in order to evaluate the Generalised Minimality hypothesis of Grillo (2009). According to this hypothesis, the comprehension deficit observed in agrammatism is the result of the underspecification of scope-discourse features giving rise to generalised intervention effects. We conducted two sentence-picture matching tasks to assess the comprehension of clitic left dislocation and contrastive focus with nine and seven Broca’s aphasic subjects, respectively, as well as control participants. The results show that the comprehension of SVO sentences and object clitics was preserved, whereas the comprehension of object dislocations and object focalisations was compromised. These findings are consistent with the analysis of the deficit as an instance of generalised intervention effects. Yet, we also examined the prediction that a relevant syntactic feature mismatch between the subject and the object would suffice to block generalised minimality effects; in particular, the number features of subject and object were controlled for. The agrammatic subjects’ performance on mismatched sentences did not differ from their performance on sentences where the subject and the object were matched in number. These findings call the hypothesis into question and stress the need for future research.

17 September 2020

Authors:

Javier Fernández Sánchez & Dennis Ott

Title:

Dislocations

Publisher: Language and Linguistics Compass, Vol. 14, issue 9 (John Wiley & Sons Ltd)

Publication date: September 2020

Dislocation is a kind of construction in which a phrasal constituent (the dislocate) appears at the outer left or right edge of a gap-less clause (its host) that contains a pronominal correlate of the dislocate. Dislocations are widely attested and presumably universally available across languages. The construction raises a number of problems for core assumptions of syntactic theory, in that these assumptions appear to thwart any coherent resolution of the question of how the dislocate relates to the internal structure of its host. This contribution is divided into two parts. In Part 1, we review central empirical properties of dislocation, which, taken together, appear to defy the laws of syntax as commonly assumed. In Part 2, we review key proposals that have emerged over the last decades to resolve this paradox and restore dislocations to normalcy.

3 October 2024

Authors:

Gemma Repiso-Puigdelliura

Title:

Preferential use of full glottal stops in vowel-initial glottalization in child speech: Evidence from novel words

Publisher: Journal of Child Language (Cambridge University Press)

Publication date: 3 October 2024

Vowel-initial glottalization constitutes a cue to prosodic prominence, realized on a strength continuum from creaky phonation to complete glottal stops. While there is considerable research on children’s early use of acoustic cues for stress marking, less is understood about the specific implementation of vowel-initial glottalization in American English. Eight sequences of function word + novel word were elicited from groups of 5-to-8-year-olds, 8-to-11-year-olds, and adults. Children exhibit a rate of prevocalic glottalization similar to that of adults but differ in its phonetic implementation: relative to adults, they produce a higher proportion of full glottal stops than of creaky phonation.

26 November 2024

Authors:

Francesc Torres-Tamarit

Title:

Proceedings of the 39th West Coast Conference on Formal Linguistics

Publisher: Cascadilla Proceedings Project, Somerville, MA, USA

Publication date: 2024


According to Loporcaro's (2015) book on Romance length, contrastive vowel length (CVL) in northern Italo-Romance is metrically governed and implicationally distributed. CVL in proparoxytones implies CVL in paroxytones, but not the other way around. Likewise, CVL in paroxytones implies CVL in oxytones, but not vice versa. This paper develops a foot-based OT analysis of CVL in northern Italo-Romance that combines layered feet (Martínez-Paricio & Kager 2015) with uneven trochees (Jacobs 2019). The analysis correctly predicts the implicational distribution of CVL and rules out unattested patterns.