TURING

The Language Understanding of Artificial Intelligence Applications

April 2024 – June 2026

Project TURING (The Language Understanding of Artificial Intelligence Applications) is funded by the Spanish State Research Agency (Agencia Estatal de Investigación). The language abilities of Large Language Models (LLMs) are a matter of intense, ongoing debate. On the one hand, some scholars believe that such models have passed the Turing test because they exhibit linguistic behavior that looks indistinguishable from that of a human, possibly even showing strong parallels to how children extrapolate the morphophonological rules of language during first language acquisition. On the other hand, other scholars have called for caution, arguing that LLMs are unable to master meaning and grammar. TURING will help fill the knowledge gap surrounding the language abilities of LLMs by performing the first systematic investigation of different AI applications, in different languages, across various domains of language (syntax, morphology, semantics, and pragmatics).

Members

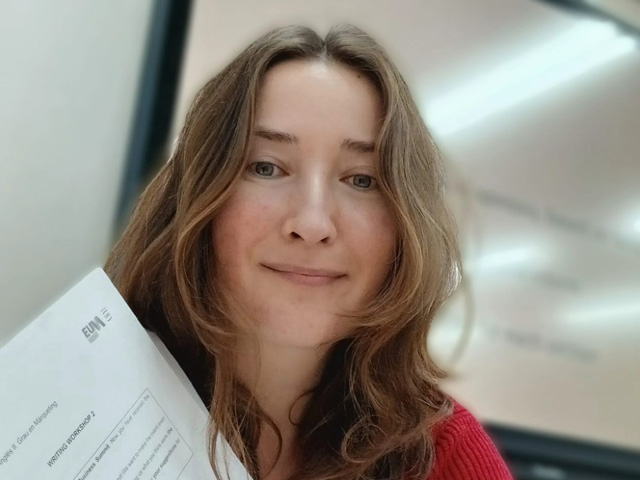

Evelina Leivada (Universitat Autònoma de Barcelona, ICREA)

Principal Investigator

Bilingual and bidialectal acquisition, language development in typical and atypical populations, language variation, theoretical linguistics

Raquel Montero (UAB)

Research Team (Post-doctoral researcher)

Syntax-Semantics Interface, Diachrony, Experimental Methods, Language Models

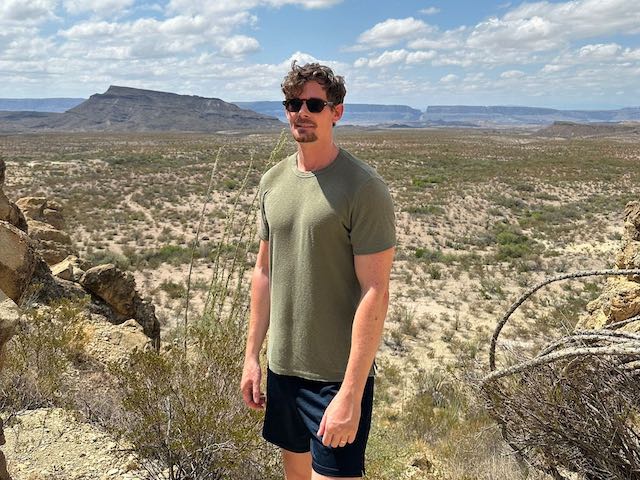

Paolo Morosi (UAB)

Research Team (Post-doctoral researcher)

Syntax-semantics interface; Functional categories; Definiteness; Romance Languages.

Natalia Moskvina (UAB)

Research Team (Post-doctoral researcher)

Language Models, Statistical learning, Formulaic language, Second language acquisition

Publications

Preprints

Philipp Schoenegger, Francesco Salvi, Jiacheng Liu, Xiaoli Nan, Ramit Debnath, Barbara Fasolo, Evelina Leivada, +32 authors. Preprint. Large Language Models are more persuasive than incentivized human persuaders. arXiv.

2025

Evelina Leivada, Gary Marcus, Fritz Günther & Elliot Murphy. 2025. A sentence is worth a thousand pictures: Can Large Language Models understand hum4n l4ngu4ge and the w0rld behind w0rds? In production, Philosophical Transactions of the Royal Society A.

2024

Vittoria Dentella, Fritz Günther, Elliot Murphy, Gary Marcus & Evelina Leivada. 2024. Testing AI on language comprehension tasks reveals insensitivity to underlying meaning. Nature Scientific Reports 14, 28083.

Evelina Leivada, Fritz Günther & Vittoria Dentella. 2024. Reply to Hu et al.: Applying different evaluation standards to humans vs. Large Language Models overestimates AI performance. PNAS 121(36), e2406752121.

Evelina Leivada, Vittoria Dentella & Fritz Günther. 2024. Evaluating the language abilities of humans vs. Large Language Models: Three caveats. Biolinguistics 18, e14391.

Project CNS2023-1444

The Language Understanding of Artificial Intelligence Applications (TURING)

Agencia Estatal de Investigación